To regulate artificial intelligence, or AI, it is important to look at the various, and often contradictory, settings where AI operates – and how it is treated there. For example, data privacy generally and in particular open banking, in which financial data are shared with third parties like technology providers, are already regulated, and it would be logical to also regulate the use of AI in those contexts. Yet, regulating AI in a general way is not the right approach, as it is used so differently in different sectors, where different values are at stake and different problems arise. Therefore, sector-specific rules, along with more research and debate, are needed. In this post, I consider AI in the financial services industry, where it is probably used most.

Artificial Intelligence Is Not A Technology … It Is the Journey[2]

AI is a constellation of different technologies working together to enable machines to sense, comprehend, act, and learn with human-like levels of intelligence. Machine learning and natural language processing, for example, are both part of AI. Each is evolving along its own path and, when applied in combination with data, analytics, and automation, can help businesses achieve their goals, be it improving customer service or optimizing the supply chain.[3]

The White House AI Bill of Rights

In its Blueprint for an AI Bill of Rights, the Biden administration articulated a set of principles and policies that I have chosen to use as a guideline for global principles. As the blueprint puts it, “Among the great challenges posed to democracy today is the use of technology, data, and automated systems in ways that threaten the rights of the American public.”[4]

Safe and Effective Systems

Have financial institutions, in adopting AI, identified concerns and potential impacts through testing and ongoing monitoring? We know from past experience that new systems can have unintended but foreseeable, and often negative, impacts. Context is also important. Using, say, ChatGPT in medical or legal applications may require very different levels of testing and transparency than using it for entertainment purposes.

Algorithmic Discrimination Protections

Another risk of AI is that it will reinforce or even increase inequities in society. It’s important to consider how AI can avoid discrimination based on race, gender, and similar characteristics. This is not a theoretical question, and reporting on it in plain language is critical. The promoters of AI have the responsibility to carry out disparity testing and mitigation.

AI can be undeniably valuable. Machine learning algorithms can make informed decisions about credit risks and asset management. AI can advance efficiency, health, and economic growth. But it should be applied fairly.

Data Privacy

AI also adds value by using data with unprecedented efficiency and speed. We should be protected from abusive data practices: people and institutions need to remain in control of their data, and their permission for its use should be explicit, even if that delays the progress of AI. That requires plain language that people can understand, along with pre-deployment assessment and surveillance.

Notice and Explanation

This is where transparency becomes critical. We should know that an automated system is being used, and the explanations should be readable, accessible, and updated. They should also be public, audited, and transparent. That includes communicating why an AI solution was chosen, how it was designed and developed, on what grounds it was deployed, how it’s monitored and updated, and the conditions under which it may be retired. There are four specific effects of building transparency:

- it decreases the risk of error and misuse,

- it distributes responsibility,

- it enables internal and external oversight, and

- it expresses respect for people.

Transparency is not an all-or-nothing proposition, however. Companies need to find the right balance on how transparent to be with which stakeholders.[5]

Human Considerations: An Opt Out?

Automated systems touch on personal subjects like money, health, and education, and the industry needs to reassure people and show them that those systems are safe and closely monitored. But it also needs to point out that AI has clear benefits, that it can improve customer service by providing personalized recommendations and advice.

The human factors approach puts a premium on people and on designing AI so that it promotes human autonomy through high levels of automation, i.e., human autonomy and machine autonomy.[6]

Geographic Fragmentation

In addition to the vast differences in how AI is used in various sectors, one must consider the differences in how various countries treat the technology.

US Regulatory Steps

In the U.S., regulating AI is in its early days. While there has been a flurry of activity by the White House and lawmakers, rules for the technology remain distant.[7] What’s more, several federal agencies are taking contrasting approaches that are fragmenting the regulatory landscape. Central banks and regulators have yet to produce a regulatory framework for AI. Here are the most recent initiatives.

- October 2021: FTC Safeguards Rule.

- May 2022: CFPB circular to address notice requirements of the Equal Credit Opportunity Act.

- June 2022: American Data Privacy and Protection Act.

- October 2022: AI Bill of Rights from the White House.

- February 2023: Data Privacy Act of 2023.

EU Regulatory Steps

The EU AI Act is set to become the world’s first comprehensive legal framework for artificial intelligence. The European Commission originally proposed it in April 2021, the Council of the European Union adopted its general approach last year, and the European Parliament just adopted its version in mid-June. Now the three will negotiate the final details before the policy becomes law, in a three-way negotiation called “trilogue.” After a final agreement, the law could become enforceable within the next few years.[8]

Japan Regulation

As it did for crypto regulations, Japan has chosen “soft regulation.” In 2019, the Japanese government published the Social Principles of Human-Centric AI as principles for implementing AI in society. The social principles set forth three basic philosophies: human dignity, diversity and inclusion, and sustainability. It is important to note that the goal of the principles is not to restrict the use of AI but rather to use it to help realize those principles. This corresponds to the structure of the Organization for Economic Cooperation and Development’s (OECD) AI principles.[9]

China Regulation

Beijing is leading the way in AI regulation, releasing groundbreaking strategies to govern algorithms, chatbots, and more. Global partners need a better understanding of what, exactly, this regulation entails, what it says about China’s AI priorities, and what lessons other AI regulators can learn. These include measures governing recommendation algorithms – the most widespread form of AI on the internet – as well as new rules for synthetically generated images and chatbots in the mold of ChatGPT. China’s emerging AI governance framework will reshape how the technology is built and deployed within China and internationally, affecting both Chinese technology exports and global AI research networks.[10]

Soon after China’s artificial intelligence rules came into effect last month, a series of new AI chatbots began trickling onto the market, with government approval. The rules have already been watered down from what was initially proposed, and so far, China hasn’t enforced them as strictly as it could. China’s regulatory approach will likely have huge implications for the technological competition between the country and its AI superpower rival, the U.S.[11]

UK Regulation

The UK government published its AI White Paper on March 29, 2023, setting out its proposals for AI regulation. The paper is a continuation of the AI Regulation Policy Paper, which introduced the UK government’s vision for the future “pro-innovation” and “context-specific” AI regulatory regime in the United Kingdom.[12]

The white paper proposes an approach different from that of the EU’s AI Act. Instead of introducing new far-reaching legislation, the government is focusing on setting expectations for the development and use of AI while empowering regulators like the Information Commissioner’s Office, the Financial Conduct Authority, and the Competition and Markets Authority to issue guidance and regulate the use of AI within their remit.

Banks and Financial Institutions Need Further Clarity

“Banks and financial institutions need further clarification in three areas,” says Irene Solaiman, policy director at Hugging Face, which conducts social impact research and leads public policy.

- Transparency: Lawmakers must define the legal requirement for transparency and how to document data sets, models, and processes.

- Model access: Whether a model is open or closed source, researchers need access to systems to conduct evaluations and build safeguards.

- Impact assessments: The research community currently doesn’t have standards for what evaluation looks like.[13]

Barry Diller, the chair of IAC and Expedia, called for a law to protect published material from capture in AI knowledge bases. “Fair use needs to be redefined, because what they have done is sucked up everything and that violates the basis of the copyright law,” Diller said on CNBC’s Squawk Box. “All we want to do is establish that there is no such thing as fair use for AI, which gives us standing.”[14]

Financial Institutions and AI

For years, the financial services industry has sought to automate its processes, ranging from back-end compliance work to customer service. But the explosion of AI has opened up new possibilities and potential challenges for financial services firms.

“For financial institutions, AI lets organizations accelerate and automate historically manual and time-consuming tasks like market research. AI can quickly analyze large volumes of data to identify trends and help forecast future performance, letting investors chart investment growth and evaluate potential risk.”[15]

Corporate and investment banks first adopted AI and machine learning decades ago, well before other industries caught on. Trading teams have used machine learning models to derive and predict trading patterns, and they’ve used natural-language processing to read tens of thousands of pages of unstructured data in securities filings and corporate actions to figure out where a company might be headed.[16]

AI could be the next big systemic risk to the financial system. In 2020, Gary Gensler (current chair of the SEC) co-wrote a paper about deep learning and financial stability. It concluded that just a few AI companies will build the foundational models that underpin the tech tools that lots of businesses will come to rely on, based on how network and platform effects have benefited tech giants in the past.[17]

The Role of Central Banks

The European Central Bank is experimenting with generative artificial intelligence across its operations to speed up basic activities, from drafting briefings and summarizing banking data to writing software code and translating documents. The move comes as central banks cautiously explore how to harness the latest advances in AI, including large language models such as ChatGPT, though they stress that it will be a long time before AI can be trusted to help set interest rates and other monetary standards.[18]

The U.S. Federal Reserve’s dual mandate is to promote maximum employment and stable prices. When firms deploy technologies that make workers more productive, they create the conditions for greater wage growth consistent with stable prices. And the labor market adjustment that follows as the economy adapts to technical change can affect maximum employment.[19]

Japan’s three biggest financial groups are joining the generative AI bandwagon, moving to adopt AI-powered chatbots to help with reports and other internal tasks. AI bots are trained on massive bodies of text to give sophisticated answers to user prompts.[20] However, it seems impossible to find a Bank of Japan policy paper on AI.

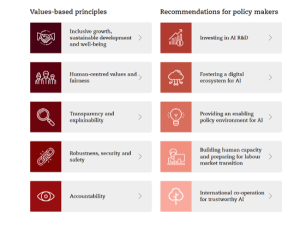

The OECD AI Principles

The OECD elaborated principles that were approved by all its members. This table summarizes them.

At the AI Safety Summit convened on November 1 and 2, 2023, by British Prime Minister Rishi Sunak, leaders from 28 nations – including China – signed the Bletchley Declaration, a joint statement acknowledging the technology’s risks. The U.S. and Britain both announced plans to launch their own AI safety institutes, and two more summits were announced to take place in South Korea and France next year. But while some consensus has been reached on the need to regulate AI, disagreements remain over exactly how that should happen – and who will lead such efforts.[22]

First Orientations for Policymakers and Regulators

“It’s frankly a hard challenge,” SEC Chair Gary Gensler said of how to regulate AI. “It’s a hard financial stability issue to address because most of our regulation is about individual institutions, individual banks, individual money market funds, individual brokers; it’s just in the nature of what we do. And this is about a horizontal [matter whereby] many institutions might be relying on the same underlying base model or underlying data aggregator.”[23]

- AI has become a fashion (as blockchain did in the wake of Bitcoin), but it is not a technology that deserves a single regulatory approach.

- The problems raised by AI are more about policy than regulation.

- Regulation should be at the level of individual segments, an approach that UK regulators are taking.

- It would be counterproductive to create AI regulations that may contradict existing regulations.

- The financial services industry is using AI to improve its infrastructure and interactions with customers.

- It is necessary to stop considering AI generally and look at the changes occurring in its components.

ENDNOTES

[1] https://www.emergenresearch.com/industry-report/ai-in-banking-market#:~:text=The%20global%20artificial%20intelligence%20(AI,42.9%25%20during%20the%20forecast%20period.

[2] https://www.forbes.com/sites/cognitiveworld/2018/11/01/artificial-intelligence-is-not-a-technology/?sh=835e8795dcb6

[3] https://www.accenture.com/us-en/insights/artificial-intelligence-summary-index?c=acn_glb_semcapabilitiesgoogle_13637429&n=psgs_0523&gclid=EAIaIQobChMI4KGD-tvLgQMVfojCCB16MAC0EAAYASAAEgJaJfD_BwE&gclsrc=aw.ds

[4] https://www.whitehouse.gov/ostp/ai-bill-of-rights/

[5] https://hbr.org/2022/06/building-transparency-into-ai-projects

[6] https://www.york.ac.uk/assuring-autonomy/demonstrators/human-factors-artificial-intelligence/#:~:text=The%20human%20factors%20(HF)%20approach,human%20autonomy%20and%20machine%20autonomy.

[7] https://www.nytimes.com/2023/07/21/technology/ai-united-states-regulation.html

[8] https://hai.stanford.edu/news/analyzing-european-union-ai-act-what-works-what-needs-improvement#:~:text=The%20EU%20AI%20Act%20is,its%20position%20in%20mid%2DJune.

[9] https://www.csis.org/analysis/japans-approach-ai-regulation-and-its-impact-2023-g7-presidency

[10] https://carnegieendowment.org/2023/07/10/china-s-ai-regulations-and-how-they-get-made-pub-90117

[11] https://time.com/6314790/china-ai-regulation-us/

[12] https://www.mayerbrown.com/en/perspectives-events/publications/2023/07/uks-approach-to-regulating-the-use-of-artificial-intelligence

[13] https://hai.stanford.edu/news/analyzing-european-union-ai-act-what-works-what-needs-improvement#:~:text=The%20EU%20AI%20Act%20is,its%20position%20in%20mid%2DJune.

[14] https://www.msn.com/en-us/money/companies/barry-diller-rips-wga-deal-with-studios-says-fair-use-needs-to-be-redefined-to-address-ai/ar-AA1hitqI?ocid=entnewsntp&cvid=afbc8faab3184886ab4c02e80bfb1753&ei=13

[15] https://kpmg.com/us/en/articles/2023/generative-ai-finance.html?utm_source=google&utm_medium=cpc&utm_campaign=7014W0000024EDCQA2&cid=7014W0000024EDCQA2&gclid=EAIaIQobChMIyYmfm9_LgQMVE9fICh15xgvgEAAYBCAAEgJRY_D_BwE

[16] https://www.mckinsey.com/industries/financial-services/our-insights/been-there-doing-that-how-corporate-and-investment-banks-are-tackling-gen-ai

[17] https://www.nytimes.com/2023/08/07/business/dealbook/sec-gensler-ai.html

[18] https://www.ft.com/content/58e4cafe-cb57-4bca-ad4f-b43a98d658df?segmentId=114a04fe-353d-37db-f705-204c9a0a157b

[19] https://www.federalreserve.gov/newsevents/speech/cook20230922a.htm

[20] https://asia.nikkei.com/Business/Finance/Japan-s-top-banks-tap-AI-chatbots-to-lighten-workload#:~:text=TOKYO%20%2D%2D%20Japan’s%20three%20biggest,sophisticated%20answers%20to%20user%20prompts.

[21] https://oecd.ai/en/ai-principles

[22] https://www.reuters.com/technology/ai-summit-start-global-agreement-distant-hope-2023-11-03/

[23] https://www.ft.com/content/8227636f-e819-443a-aeba-c8237f0ec1ac

This post comes to us from Georges Ugeux, who is the chairman and CEO of Galileo Global Advisors and teaches international finance at Columbia Law School.

Sky Blog
